- #COPY AND UNLOAD TRAFFIC OF YOUR REDSHIFT CLUSTER HOW TO#

- #COPY AND UNLOAD TRAFFIC OF YOUR REDSHIFT CLUSTER UPDATE#

- #COPY AND UNLOAD TRAFFIC OF YOUR REDSHIFT CLUSTER CODE#

Etlworks Integrator provides a flow type that extracts data from Amazon Redshift, transforms it, and loads it into any destination. It supports executing complex ELT scripts directly in Redshift, which greatly improves the performance and reliability of data ingestion.

To get started, create a connection for the Redshift cluster. It is important to understand how JDBC data types are mapped to Redshift data types. You can ETL data from multiple database objects (tables and views) into Redshift by a wildcard name without creating individual source-to-destination transformations. You can also set up change replication using a high watermark (HWM): with HWM replication, only new and updated records are loaded into Redshift.

You can load data into Amazon Redshift from multiple sources. "A typical CDC Flow can extract data from multiple tables in multiple databases, but having a single Flow pulling data from 55000+ tables would be a major bottleneck as it would be limited to a single blocking queue with a limited capacity. It would also create a single point of failure."

Configure Redshift and configure the firewall. Typically, TCP port 5439 is used to access Amazon Redshift. If Amazon Redshift and Etlworks Integrator are running on different networks, it is important to enable inbound traffic on port 5439.
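To make the high watermark (HWM) idea mentioned above more concrete, here is a minimal sketch of the selection step. It is not how Etlworks Integrator implements HWM replication; the `SourceRow` shape, the `updatedAt` marker, and the `extractSinceWatermark` function are hypothetical names chosen for illustration.

```typescript
// Hypothetical row shape: any record that carries an increasing change marker.
interface SourceRow {
  id: number;
  updatedAt: number; // assumption: e.g. epoch milliseconds of the last change
}

interface HwmBatch {
  rows: SourceRow[];     // rows changed since the previous run
  nextWatermark: number; // value to persist for the next run
}

// Select only rows newer than the stored watermark and compute the new one.
function extractSinceWatermark(source: SourceRow[], watermark: number): HwmBatch {
  const rows = source.filter((row) => row.updatedAt > watermark);
  const nextWatermark = rows.reduce(
    (max, row) => Math.max(max, row.updatedAt),
    watermark, // stays unchanged when nothing new was found
  );
  return { rows, nextWatermark };
}
```

Each run would load `rows` into Redshift and then persist `nextWatermark`, so the following run skips everything that has already been loaded.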

#COPY AND UNLOAD TRAFFIC OF YOUR REDSHIFT CLUSTER HOW TO#

Etlworks Integrator includes several flows optimized for Amazon Redshift:

- When you need to extract data from any source, transform it, and load it into Redshift.
- When you need to bulk-load files that already exist in S3 without applying any transformations. The flow automatically generates the COPY command and MERGEs data into the destination.
- When you need to stream updates from a database that supports Change Data Capture (CDC) into Redshift in real time.
- When you need to stream messages from a queue that supports streaming into Redshift in real time.
- When you need to bulk-load data from file-based or cloud storage, an API, or a NoSQL database into Redshift without applying any transformations. This flow requires a user-defined COPY command. Unlike "Bulk load files in S3 into Redshift", this flow does not support automatic MERGE.
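For the flow that requires a user-defined COPY command, a statement could be assembled along these lines. This is only a sketch: `CopyOptions`, `quoteLiteral`, and `buildCopyCommand` are hypothetical helpers, not part of Etlworks Integrator, and the table name, bucket, and role ARN used with them would be placeholders.

```typescript
// Hypothetical options for a COPY from S3; all names are illustrative.
interface CopyOptions {
  table: string;       // destination table (trusted input, not escaped here)
  s3Uri: string;       // S3 prefix or object to load from
  iamRoleArn: string;  // role the cluster assumes to read from S3
  format: "CSV" | "PARQUET";
  ignoreHeader?: number; // header rows to skip, CSV only
}

// Quote a SQL string literal by doubling embedded single quotes.
function quoteLiteral(value: string): string {
  return `'${value.replace(/'/g, "''")}'`;
}

// Build a Redshift COPY statement from the options above.
function buildCopyCommand(opts: CopyOptions): string {
  const parts = [
    `COPY ${opts.table}`,
    `FROM ${quoteLiteral(opts.s3Uri)}`,
    `IAM_ROLE ${quoteLiteral(opts.iamRoleArn)}`,
    `FORMAT AS ${opts.format}`,
  ];
  if (opts.format === "CSV" && opts.ignoreHeader !== undefined) {
    parts.push(`IGNOREHEADER ${opts.ignoreHeader}`);
  }
  return parts.join("\n") + ";";
}
```

Because this flow does not support automatic MERGE, a statement built this way only appends the loaded rows; any merge logic would have to be supplied separately.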

Note that this does not occur when duplicate privileges are granted within the sameĪpplication, as such privileges are de-duplicated before any SQL query is submitted.Amazon Redshift is a fast, fully-managed data warehouse that makes it simple and cost-effective to analyze all your data using standard SQL and your existing Business Intelligence (BI) tools. This leads to the undesirable state where application 2 still contains theĬall to grant but the user does not have the specified permission.

In general, application 1 does not know thatĪpplication 2 has also granted this permission and thus cannot decide not to issue the Now, if application 1 were to remove the call to grant, a REVOKE USER SQL query is

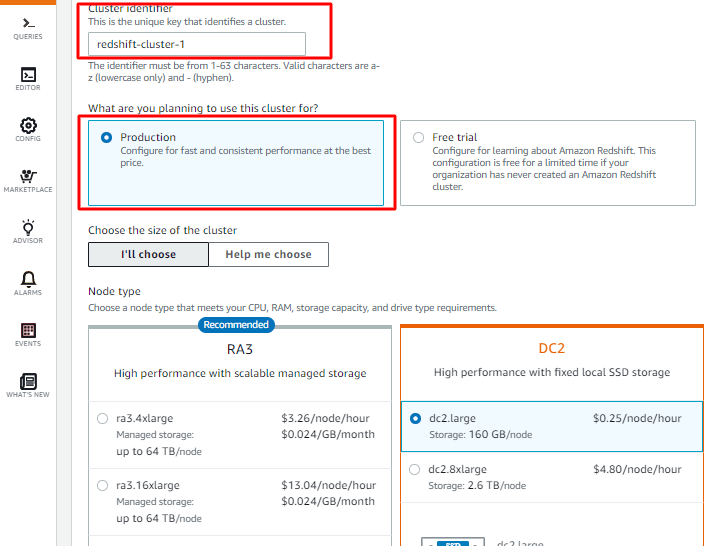

Two calls will have no effect since the user has already been granted the privilege. Submitting a GRANT USER SQL query to the Redshift cluster. import * as ec2 from 'aws-cdk-lib/aws-ec2' Ĭonst cluster = new Cluster( this, 'Redshift', ],īoth applications attempt to grant the user the appropriate privilege on the table by The nodes are always launched in private subnets and are encrypted by default. You can specify a VPC, otherwise one will be created. To set up a Redshift cluster, define a Cluster.
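The privilege conflict between two applications described in this section can be reproduced with a small in-memory model. This is a sketch under stated assumptions, not the CDK implementation: `SimulatedCluster`, `deploy`, and the `Grant` shape are hypothetical, and the cluster is reduced to a set of effective privileges plus a log of the statements it received.

```typescript
interface Grant { user: string; table: string; privilege: string; }

function grantKey(g: Grant): string {
  return [g.user, g.table, g.privilege].join("|");
}

// Stand-in for the cluster: tracks effective privileges and received SQL.
class SimulatedCluster {
  private effective: { [key: string]: boolean } = {};
  readonly statements: string[] = [];

  grant(g: Grant): void {
    this.statements.push(`GRANT ${g.privilege} ON ${g.table} TO ${g.user}`);
    this.effective[grantKey(g)] = true; // repeating a grant changes nothing
  }

  revoke(g: Grant): void {
    this.statements.push(`REVOKE ${g.privilege} ON ${g.table} FROM ${g.user}`);
    delete this.effective[grantKey(g)]; // removed regardless of who granted it
  }

  has(g: Grant): boolean {
    return this.effective[grantKey(g)] === true;
  }
}

// Collapse duplicate grants declared within one application.
function dedupe(grants: Grant[]): { [key: string]: Grant } {
  const byKey: { [key: string]: Grant } = {};
  for (const g of grants) byKey[grantKey(g)] = g;
  return byKey;
}

// One application's deployment: it only sees its own previous and next grants.
function deploy(cluster: SimulatedCluster, previous: Grant[], next: Grant[]): void {
  const before = dedupe(previous);
  const after = dedupe(next);
  for (const key of Object.keys(before)) {
    if (!(key in after)) cluster.revoke(before[key]);
  }
  for (const key of Object.keys(after)) {
    if (!(key in before)) cluster.grant(after[key]);
  }
}

const cluster = new SimulatedCluster();
const select: Grant = { user: "analyst", table: "orders", privilege: "SELECT" };
deploy(cluster, [], [select]);    // application 1 grants
deploy(cluster, [], [select]);    // application 2 grants, no additional effect
deploy(cluster, [select], []);    // application 1 removes its call to grant
console.log(cluster.has(select)); // false, although application 2 still declares it
```

Since `deploy` compares only one application's own before and after state, it has no way to notice that another application depends on the same privilege, which mirrors the situation described above.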

#COPY AND UNLOAD TRAFFIC OF YOUR REDSHIFT CLUSTER CODE#

#COPY AND UNLOAD TRAFFIC OF YOUR REDSHIFT CLUSTER UPDATE#

> The APIs of higher level constructs in this module are experimental and under active development.
> They are subject to non-backward compatible changes or removal in any future version. They are
> not subject to the Semantic Versioning model, so breaking changes can arrive in any release.
> This means that while you may use them, you may need to update
> your source code when upgrading to a newer version of this package.
